We want to convert the multi-party call into a single low-latency stream that can be broadcast to thousands of concurrent viewers.

Step 1: Compose multiple WebRTC streams into one single stream

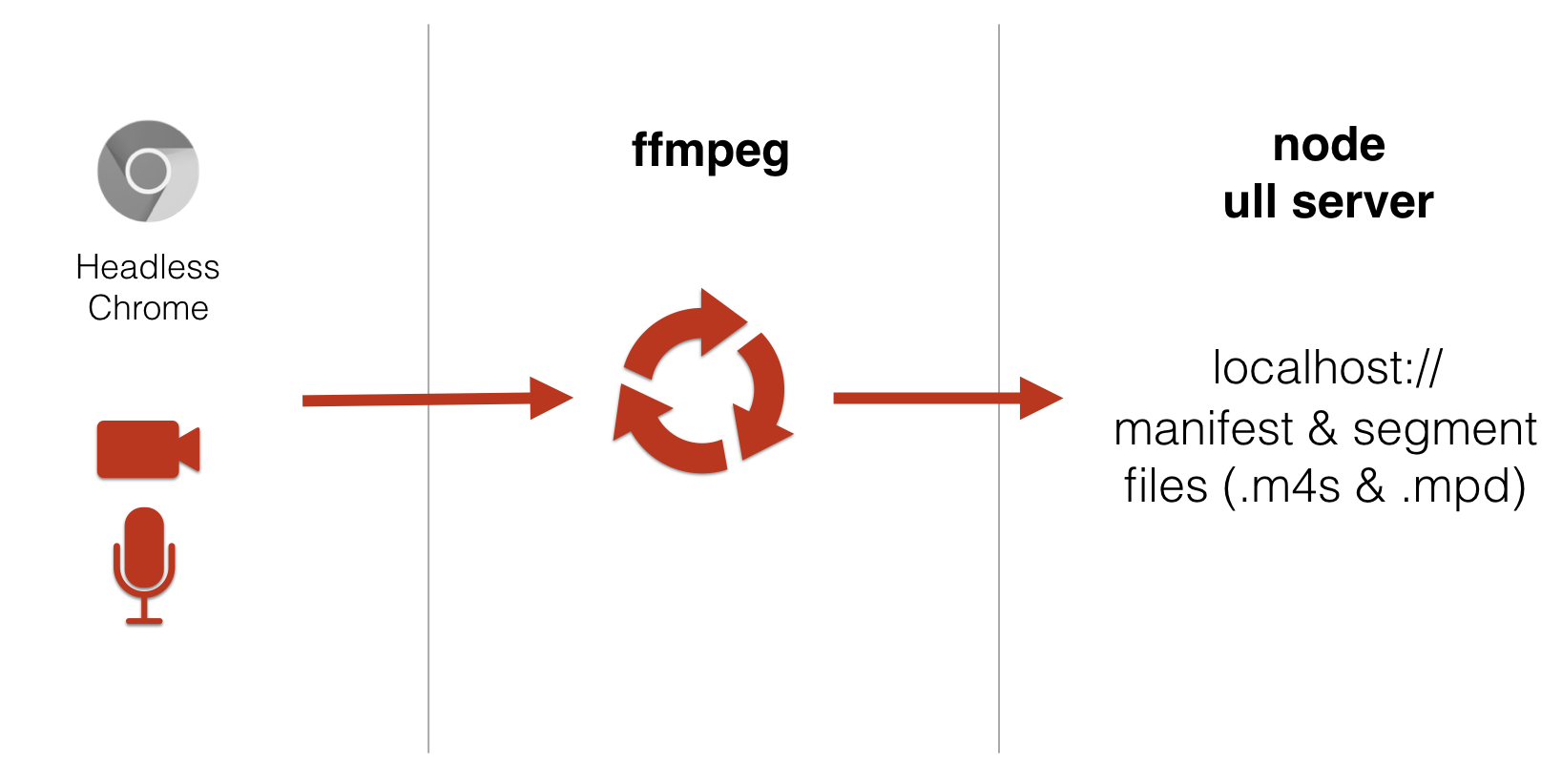

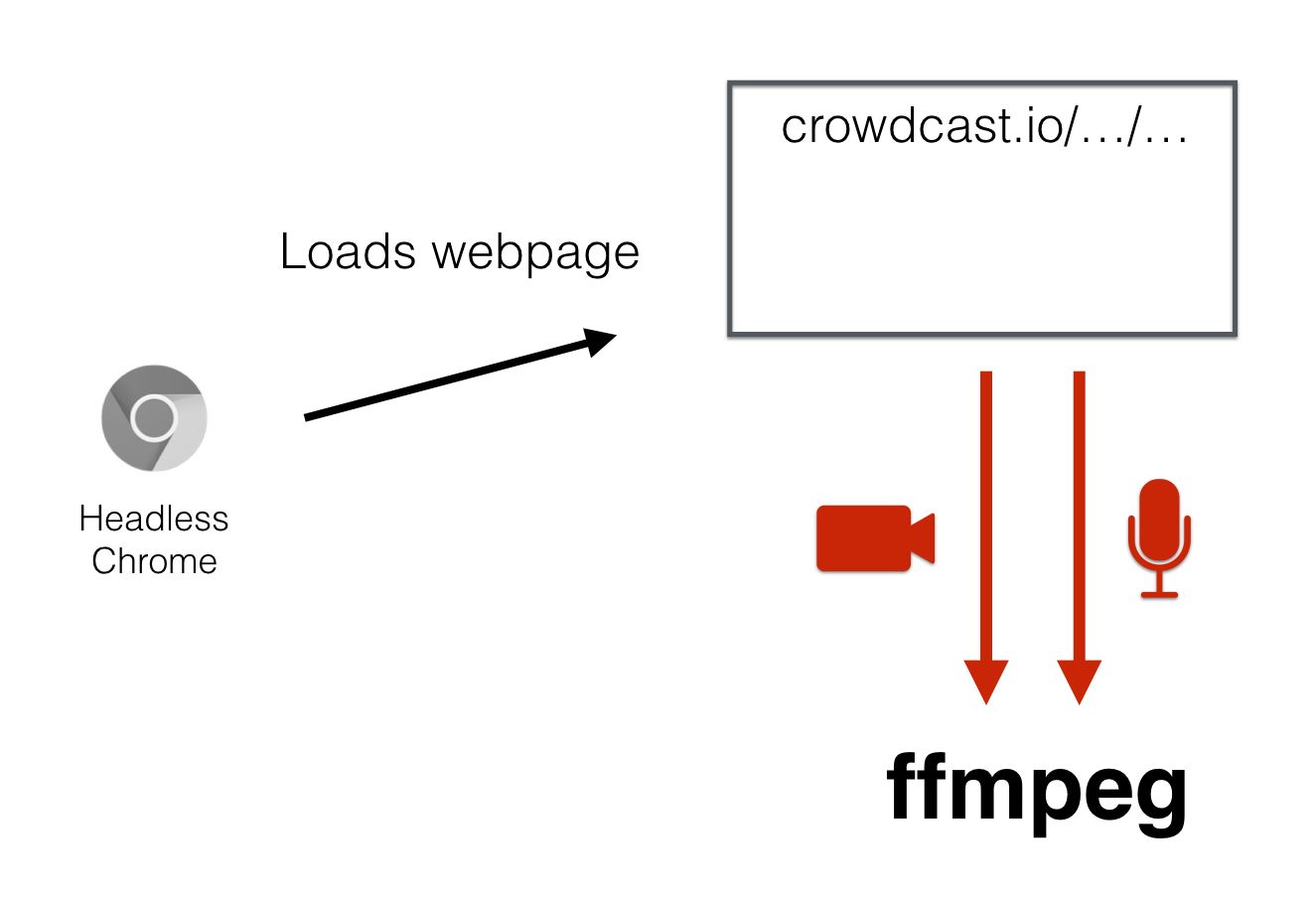

For this, we reached for headless Chrome and started exploring whether we could pull the audio and video out of the browser and feed them into something like ffmpeg. Imagine a Docker container that looks like this:

headless chrome to ffmpeg

This was no small task. It took us about two months to tweak the setup and find a configuration that gave us a good result. In the end, we have a Docker container running on an EC2 server. The container runs headless Chrome, which loads a webpage and feeds audio and video into ffmpeg.

At this point, the media is not being broadcast. ffmpeg is transcoding and writing to a local file, and we can confirm that the audio sounds good and the video runs at about 30fps. Step 1 complete!

Why headless Chrome?

- It lets us avoid ingesting and composing multiple streams ourselves.

- Chrome gives us full layout control with HTML/CSS/JS.

Step 2: Broadcast at Low Latency

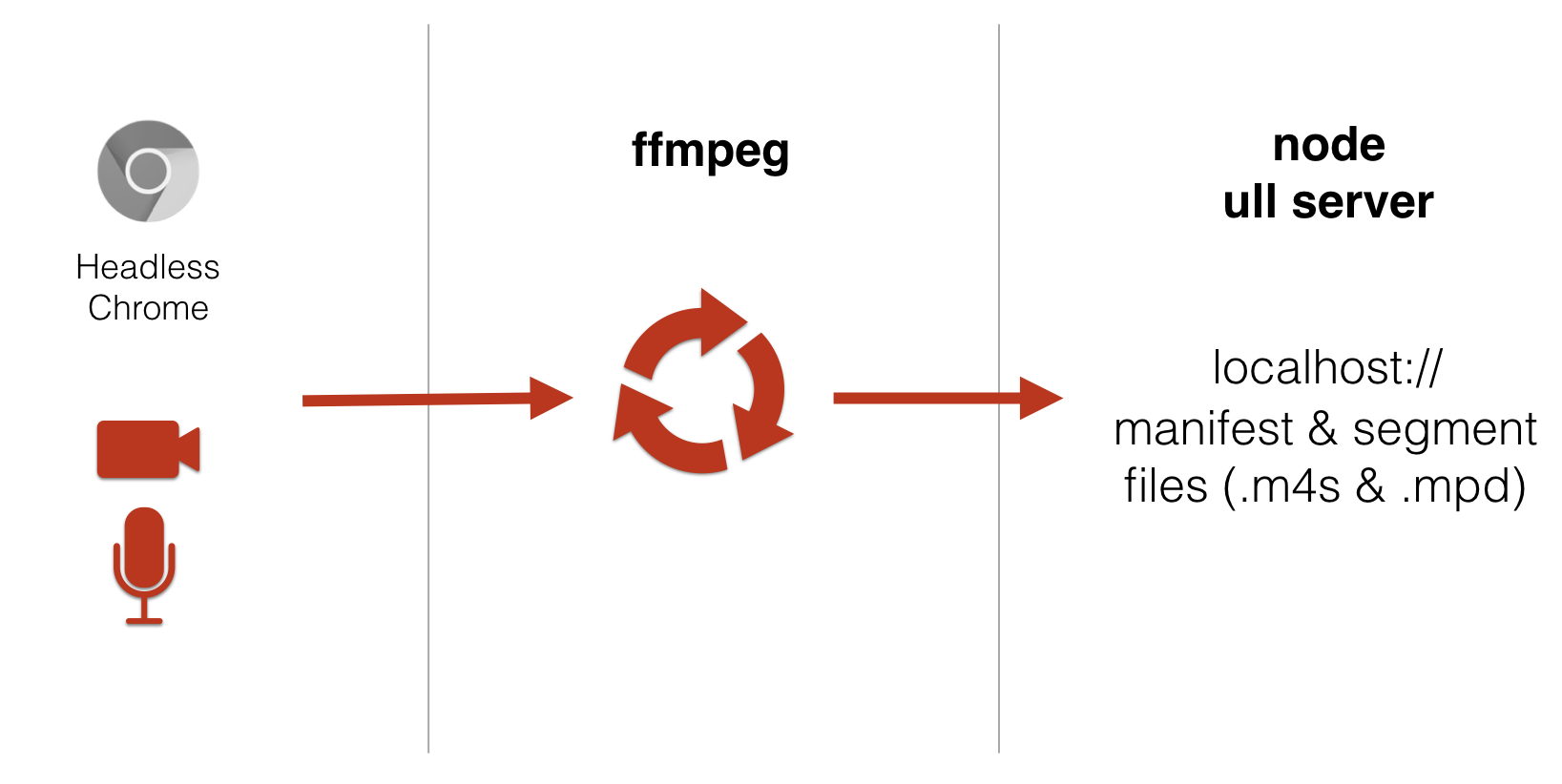

Now that ffmpeg is transcoding the media coming from the browser, we need to output it in DASH/CMAF format. The Docker container now looks like this: instead of writing to a local file, ffmpeg uploads the manifest and segment files to a Node server running on localhost.

headless chrome to ffmpeg to localhost node server
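As a rough sketch of what the output side of that ffmpeg command could look like, the helper below builds an argument list for ffmpeg's DASH muxer. The flags are real DASH muxer options (`-ldash` needs a fairly recent ffmpeg), but the function name, port, codecs, and durations are assumptions for illustration, not our production configuration.

```javascript
// Builds the output-side ffmpeg arguments for low-latency DASH/CMAF over HTTP.
// Every value here (segment length, codecs, window size) is an assumption.
function buildDashOutputArgs({ uploadBaseUrl, segmentSeconds = 2, fps = 30 }) {
  // One keyframe per segment so each segment can be decoded on its own.
  const gop = String(fps * segmentSeconds);
  return [
    "-c:v", "libx264", "-preset", "veryfast", "-tune", "zerolatency",
    "-g", gop, "-keyint_min", gop, "-sc_threshold", "0",
    "-c:a", "aac",
    "-f", "dash",
    "-seg_duration", String(segmentSeconds),
    "-streaming", "1",        // write each fragment out as soon as it is ready
    "-ldash", "1",            // low-latency DASH mode
    "-use_template", "1", "-use_timeline", "0",
    "-window_size", "5",      // segments kept in the manifest
    "-method", "PUT",         // upload manifest and segments with HTTP PUT
    "-http_persistent", "1",  // reuse the HTTP connection between uploads
    `${uploadBaseUrl}/manifest.mpd`,
  ];
}

// Usage (input arguments omitted, since they depend on the capture setup):
// spawn("ffmpeg", [...inputArgs, ...buildDashOutputArgs({ uploadBaseUrl: "http://localhost:8080/job1" })]);
```

The segment duration and GOP size are tied together on purpose: if keyframes do not line up with segment boundaries, the muxer cannot cut segments at the requested length.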

Let's dive deeper into CMAF and see how it works and how it helps us achieve low latency over HTTP.

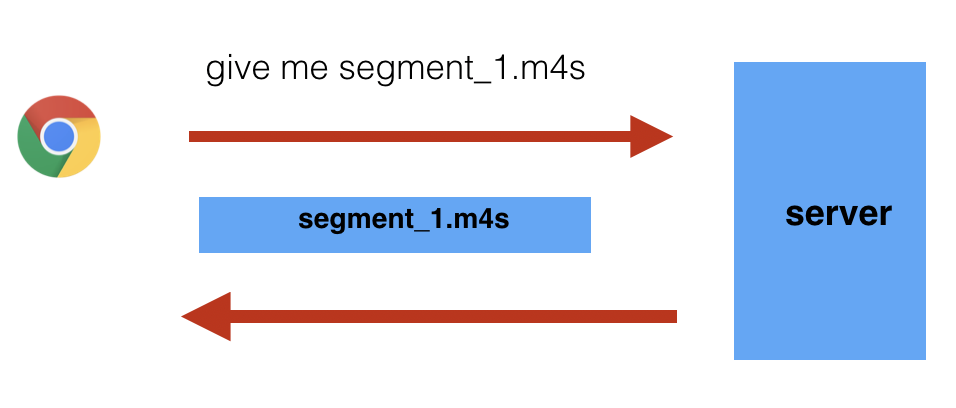

Generally speaking, this is how video streaming over HTTP works:

The player requests a video file. Let's say this file represents 6 seconds of video content.

The server serves this file to the player, and the player keeps it in a buffer and then plays it at the right time.

player: request file / get file

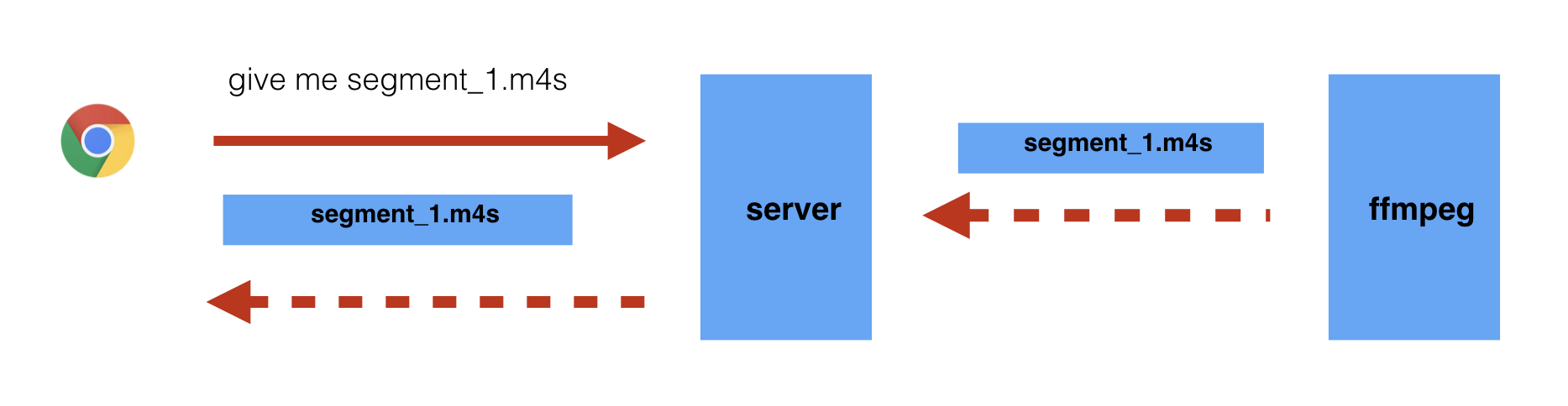

Now let's push the limits. Say the browser requests the file "segment_1.m4s" and the server doesn't have it yet. Then the server can't serve the file, right?

Well, maybe. What if the server doesn't have the "segment_1.m4s" file yet, but it almost has it? The transcoder is uploading the file using HTTP chunked transfer encoding at the same moment the player requests it.

The "segment_1.m4s" file is broken up into small HTTP chunks and is in the process of being uploaded to the server. So the server has parts of the file, but not the whole thing yet.

What we can do is implement a simple HTTP server with Node.js and Express to handle this. As the transcoder uploads the file, the player simultaneously downloads it. Both the upload and the download use HTTP chunked transfer encoding, so it actually looks like this (the dotted line represents HTTP chunked transfer encoding).

player: request file stream file
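To make the idea concrete, here is a minimal sketch of such a server. It uses Node's built-in `http` module rather than Express so it runs without dependencies, and it keeps segments in memory; `createUllServer` and the in-memory store are assumptions for illustration, not our actual ull-server code.

```javascript
const http = require("http");

// Sketch of a server that serves a file while it is still being uploaded.
// Storage is an in-memory Map with no eviction, which is an assumption made
// to keep the example short.
function createUllServer() {
  // path -> { chunks: Buffer[], done: boolean, listeners: Set<ServerResponse> }
  const segments = new Map();

  return http.createServer((req, res) => {
    if (req.method === "PUT" || req.method === "POST") {
      // Start a fresh entry so a re-uploaded manifest replaces the old one.
      const entry = { chunks: [], done: false, listeners: new Set() };
      segments.set(req.url, entry);
      req.on("data", (chunk) => {
        entry.chunks.push(chunk);
        // Forward each uploaded chunk to every player already downloading.
        for (const listener of entry.listeners) listener.write(chunk);
      });
      req.on("end", () => {
        entry.done = true;
        for (const listener of entry.listeners) listener.end();
        entry.listeners.clear();
        res.statusCode = 201;
        res.end();
      });
      return;
    }

    if (req.method === "GET") {
      const entry = segments.get(req.url);
      if (!entry) {
        res.statusCode = 404;
        res.end();
        return;
      }
      // No Content-Length is set, so Node answers HTTP/1.1 clients with
      // chunked transfer encoding.
      res.statusCode = 200;
      res.flushHeaders();
      for (const chunk of entry.chunks) res.write(chunk); // what we have so far
      if (entry.done) {
        res.end();
        return;
      }
      entry.listeners.add(res); // the rest arrives as the upload continues
      res.on("close", () => entry.listeners.delete(res));
      return;
    }

    res.statusCode = 405;
    res.end();
  });
}

// Usage: createUllServer().listen(8080);
```

A player that requests a segment mid-upload first receives the chunks already on the server and then keeps receiving new ones until the transcoder finishes. A real server would also need to handle aborted uploads and drop old segments.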

We're almost there! We have ffmpeg transcoding to DASH/CMAF and uploading to a server, and a player can stream the video at about 3s of latency.

Where does the remaining 3s latency come from?

- Realtime to the browser over WebRTC is ~300ms

- ffmpeg transcoding is ~500ms

- The rest is player buffer
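Putting the approximate figures above together gives a quick estimate of how much of the budget the player buffer takes. The variable names are illustrative and the inputs are the rough numbers quoted in this post, not measurements.

```javascript
// Rough latency budget in milliseconds, using the approximate figures above.
const totalLatencyMs = 3000; // ~3s observed end to end
const webrtcMs = 300;        // realtime to the browser over WebRTC
const transcodeMs = 500;     // ffmpeg transcoding
const playerBufferMs = totalLatencyMs - webrtcMs - transcodeMs;

console.log(`player buffer is roughly ${playerBufferMs} ms`); // 2200
```

So the player buffer accounts for roughly 2.2s of the 3s, which makes it the largest remaining contributor.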

Step 3: Put a CDN on it

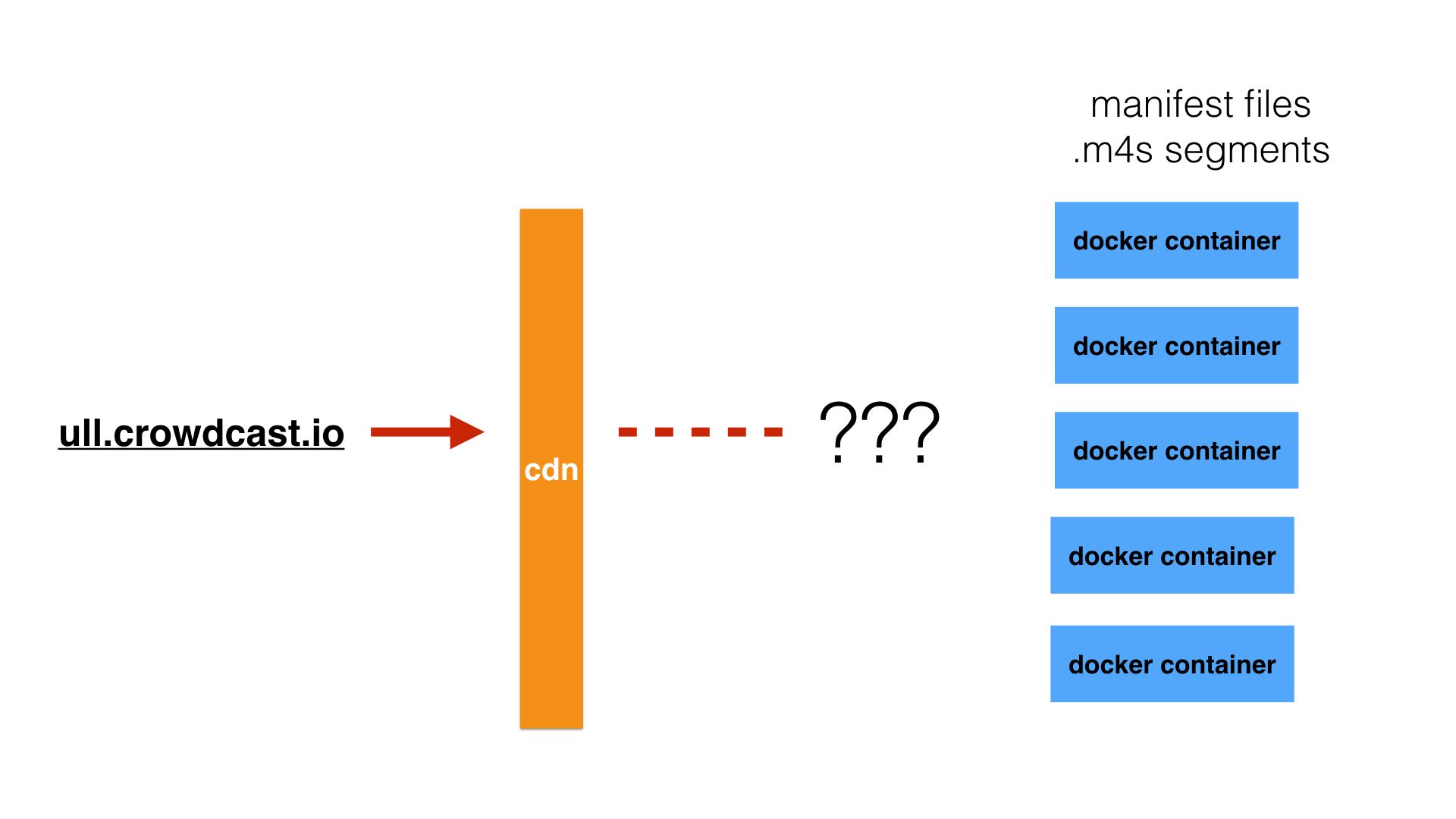

Now that we have a Node server running in the same Docker container as ffmpeg, how can we put a CDN in front of it all?

Normally we point a CDN at a single origin. But in our case, each Crowdcast event has an individual Docker container running on its own host, so we effectively need to point a CDN at multiple different origins dynamically.

cdn with multiple hosts

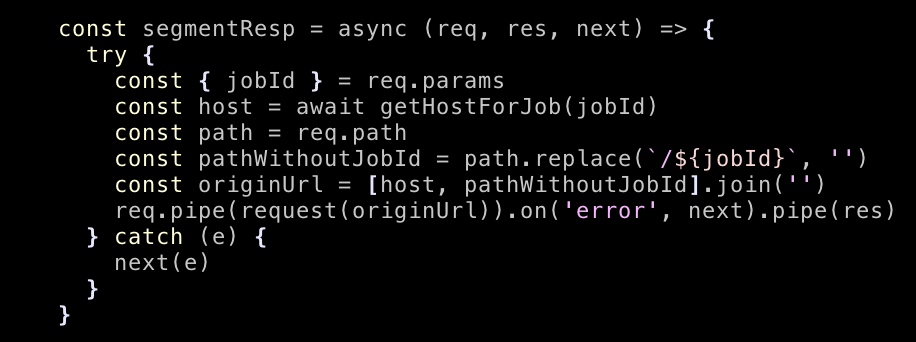

The solution is to put a set of web servers in the middle. These web servers act as a proxy that pipes requests through to the "real" origin. We call them "ull-proxy". Here's the bit of Node/Express code that does it.

All this code does is:

- Strip out a unique ID from the request (we call this "jobId")

- Use that "jobId" to figure out the host for the real origin

- Use `req.pipe(request).pipe(res)` to proxy the request to the real origin, which is the "ull server" running on the Docker container

express ull proxy

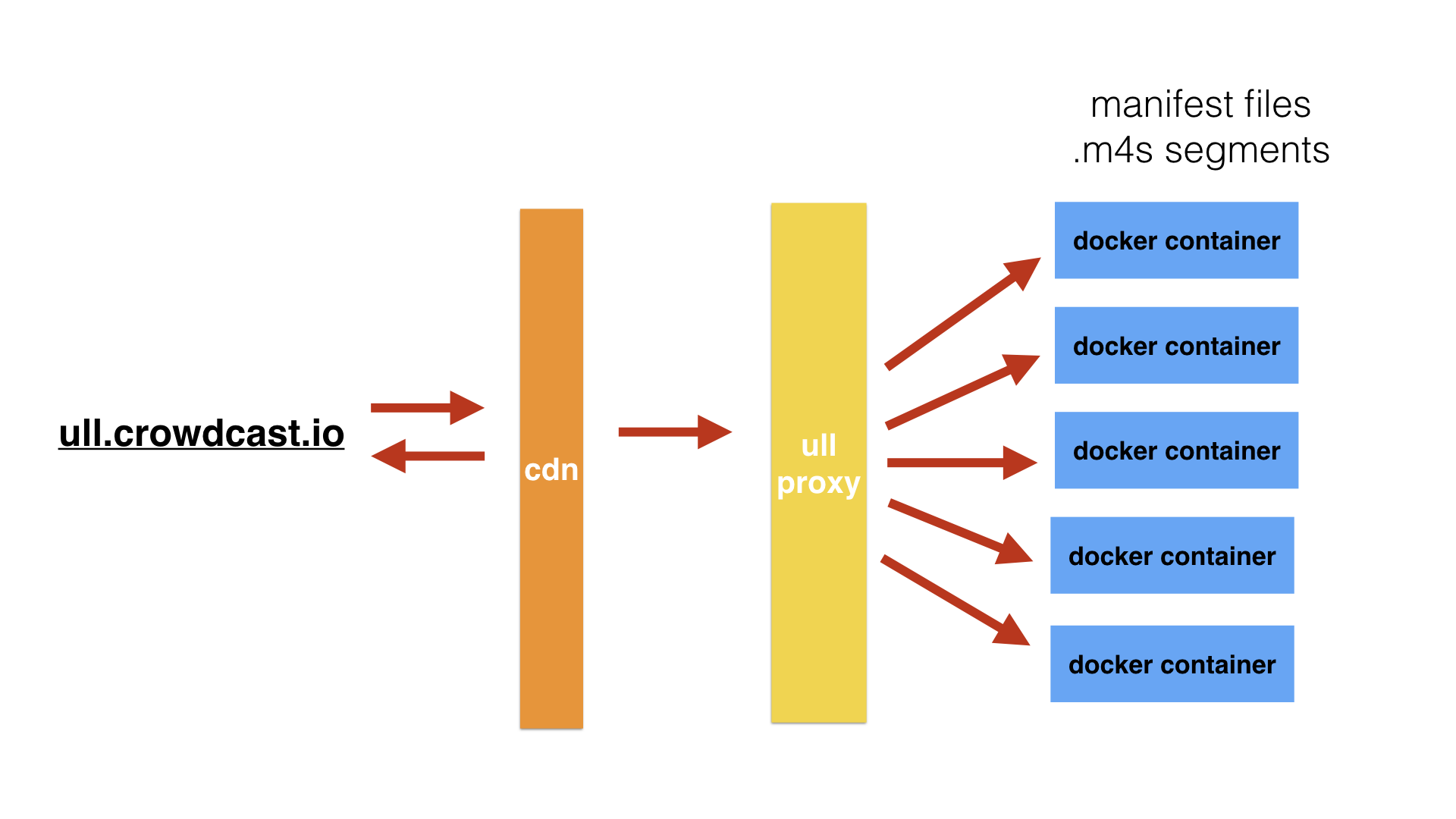
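For readers who want something to copy and run, the three steps can be sketched as follows. This version uses Node's built-in `http` module in place of Express and the `request` library, and it assumes a URL layout of `/<jobId>/<file>` plus a caller-supplied `lookupOrigin` function. Those names and the layout are assumptions for illustration, not the exact code in the image.

```javascript
const http = require("http");

// Assumed URL layout: /<jobId>/<file>, e.g. /abc123/segment_1.m4s
function parseJobId(url) {
  const match = /^\/([A-Za-z0-9_-]+)\/(.+)$/.exec(url);
  return match ? { jobId: match[1], file: match[2] } : null;
}

// lookupOrigin(jobId) is a hypothetical function that returns
// { host, port } for the container running that event, or null.
function createUllProxy(lookupOrigin) {
  return http.createServer((req, res) => {
    const parsed = parseJobId(req.url);
    const origin = parsed && lookupOrigin(parsed.jobId);
    if (!origin) {
      res.statusCode = 404;
      res.end();
      return;
    }
    const upstream = http.request(
      { host: origin.host, port: origin.port, method: req.method, path: req.url },
      (upstreamRes) => {
        res.writeHead(upstreamRes.statusCode, {
          "content-type": upstreamRes.headers["content-type"] || "application/octet-stream",
        });
        // Chunks flow to the player as they arrive from the origin.
        upstreamRes.pipe(res);
      }
    );
    upstream.on("error", () => {
      if (!res.headersSent) res.statusCode = 502;
      res.end();
    });
    req.pipe(upstream); // ends the upstream request once the incoming one ends
  });
}

// Usage: createUllProxy((jobId) => originsByJob.get(jobId) || null).listen(3000);
```

Because the response is piped rather than buffered, a segment that is still being uploaded at the origin keeps streaming through the proxy chunk by chunk.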

To make this even better we put the "ull-proxy" code onto an edge computing "serverless" platform (Cloudflare Workers).

cdn with ull proxy to multiple hosts
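A Worker version of the same proxy might look roughly like the sketch below. It uses the service-worker style `addEventListener("fetch", ...)` entry point that Cloudflare Workers support. The `ORIGINS` table, its address, and the cache lifetime are placeholders invented for this example; a real deployment would look the origin up in a datastore and choose cache lifetimes separately for manifests and segments.

```javascript
// Hypothetical jobId -> origin table (placeholder address).
const ORIGINS = new Map([["job1", "http://203.0.113.10:8080"]]);

// Maps an incoming URL such as https://ull.crowdcast.io/job1/segment_1.m4s
// to the matching URL on the real origin, or null if the job is unknown.
function resolveOriginUrl(requestUrl, origins = ORIGINS) {
  const url = new URL(requestUrl);
  const jobId = url.pathname.split("/")[1];
  const origin = origins.get(jobId);
  return origin ? origin + url.pathname + url.search : null;
}

async function handleRequest(request) {
  const originUrl = resolveOriginUrl(request.url);
  if (!originUrl) return new Response("unknown job", { status: 404 });

  const upstream = await fetch(originUrl, { method: request.method });
  // Re-wrap the response so its headers are mutable; the body is passed
  // through as a stream rather than buffered.
  const response = new Response(upstream.body, upstream);
  response.headers.set("Cache-Control", "public, max-age=60"); // placeholder value
  return response;
}

// Register the handler only where a Worker-style runtime provides it.
if (typeof addEventListener === "function") {
  addEventListener("fetch", (event) => event.respondWith(handleRequest(event.request)));
}
```

Keeping the URL mapping in its own function makes the routing logic easy to test outside the Worker runtime.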

Now, requests to ull.crowdcast.io first hit the CDN. If the CDN is cold, the request routes through the Cloudflare Worker, which proxies it to the correct host and tells the CDN to cache the asset. Of course, the CDN needs to support HTTP chunked transfer, and the CDN's shield cache needs to be able to stream HTTP chunks to multiple clients simultaneously without bombarding your origin.

Lastly

You can also watch this as a talk: I presented on this exact topic at the SF Video Tech Meetup, including a live demo of the ull-server.

Questions and discussion are encouraged. Find me on Twitter or reach out. I'd love to talk to you about video!

Sai

Founder & CEO — Building spaces for community.